Every once in a while I end up doing something a little crazy.

At the very least, I tend to have a hard time convincing others that there is any need at all to devote time to my newly-prioritized task.

The latest had me writing a program to parse a list of 65,534 domains names in order to determine which ones were registered, and therefore perhaps were being used to host a website.

As a copywriter you do not ordinary find yourself attempting to get to the bottom of questions of this type. But recently I have begun branching out into the world of media buying. Specifically media buying involving the Google display network (GDN) which serves up an estimated 10 billion ad impressions every day. In a moment you will see how this lead me back to my roots as a programmer.

Life As A Domain Parking Assistant

Writing code is something I have done off an on since the 1980s.

First it was scientific programming, then application building, and finally it devolved to website programming of one type or another.

In my capacity as an application builder many years ago I got the chance to write the code for a robot that daily parsed the records of (if I remember correctly) dozens of whois servers which respond to requests about a given domain by sending back information about the registrar associated with the domain, the owner of the domain, and several other tidbits relating to administration of that domain on the web.

At the time I was working for a domain-parking company which was in the practice of parsing these ever-changing domain records in the hope of spotting dissatisfied domain name owners who had just moved a block of domains from one domain-parking competitor to another. Evidence of the move could be found in the whois record. The company would then activate its salesmen who would attempt to swoop in and convince the owner of the domains to come host with us.

Occasionally the company might even try to buy one or more domains outright if it looked as though the domains might be able to generate a decent amount of “type in” traffic. That is, if for example you owned the domain socks.com, which people might attempt to visit directly if they wanted to buy socks, the company would attempt to acquire the domain and put paid ads on the home page. This may sound like a very shallow business model, but it turns out to be a very profitable one, which is why I was eventually tasked to overhaul the badly written code responsible for finding domains potentially vulnerable to acquisition.

Fortunately I am very good at doing this kind of work and the revised application churned out leads daily to the delight of the company’s sales people.

From Coder To Copywriter And Back Again

The last time I was regularly paid to write code was in 2005. Today I write more copy than code, but I still dabble when the opportunity calls for it.

So when I found myself presented with an “exclusion” list of more than 65 thousand domains that I was told would needlessly eat away my client’s Google Adwords budget without delivering any results (leads) I remembered I had parsed domain records in the past. Lots of them. There was no reason I could not do it again, and being the skinflint that I am I was inclined to attempt to use the best possible version of that exclusion list to keep the ad costs down on my client’s campaign.

Here’s what you need to know about exclusion lists.

Before you activate your ad campaigns and allow them to burn through your ad budget, Google Adwords will allow you to upload a list of “placement” URLs – the places where your ads should not be shown.

Ordinarily it takes time to build such a list. Potentially years. Typically you would monitor your campaign performance, identify the placements which are costing you money but not producing results, and you would add those placements to your exclusion list. So you can see why any shortcut to building your exclusion list could be valuable.

My list of excluded placements was offered as a bonus for having taken the AdSkills Google display advertising course. AdSkills is a reputable and skilled media buying company which I assume makes the bulk of its money today teaching what it used to offer as services for clients.

I would have used their massive exclusion list too, had the number of domains in the list not exceeded Google’s upload limit of 65 thousand. Although the list did upload, the process also spewed out copious error messages when the limit was finally exceeded. So that got me thinking about how to trim back the size of the list. To be able to upload with causing error messages I would need to toss out some portion of those 65 thousand plus domains.

But which ones should go?

How To Trim An Exclusion List

After I thought about it a little it seemed obvious that any domain that was no longer registered, and therefore was no longer being used to host a web site capable of displaying Google adverts, no longer needed to be on the exclusion list. In order to remove those (now non registered) domains I would need to inspect the whois record associated with each domain and determine its registration status.

In principal this is fairly straightforward. While the programming effort required to get the job done is not trivial, it is a long way from seeming insurmountable.

And yet because I am lazy, and because it has been a while since I have done this kind of work, I turned to the web for some freely-available PHP code to do a whois server lookup.

The code I found came with an assortment of whois server URLs for different top level domains, e.g. .com, .net, .org, etc.

However, I did notice that the author who wrote the code made the (incorrect) assumption that all whois servers return a uniformly formatted set of data. If true, this would make it easy to determine whether or not a domain is registered. But in practice whois servers tend to follow the logic and competence of the programmers employed to implement them. Non-uniformity of the returned whois responses and incorrect spellings make it difficult to do a string search for the line containing the registrar information.

Additionally, because there seems to be no standard way for a registrar to say “no matching result” for a domain name search it is necessary to be aware of the particular way each of them does it.

So to prime my domain-querying robot I had to do a manual search on each of the whois services, one for a domain known to be registered (such as abc.com), and one for a domain known not to be registered (e.g. someimaginarything.com). I made the assumption that the format of the information which came back (the found or not found response) would be the same regardless of the particular domain queried. Usually this meant that if the string “Registrar:” was present then the domain was registered. For the non registered domains the string would look more like “NOT FOUND”, “nothing found”, “no match”, “Object does not exist” or some such variant.

Some whois servers actively block requests, but surprisingly few, even when querying from a home connection. This means a whois server-querying robot run on someone’s home machine (like mine) can fetch whois records on a more or less continuous basis. To lower my risk of being flagged as an aggressive whois data harvester, and having my I.P. address blocked by one or more of these servers, I throttled my domain requests by introducing a one-tenth of a second delay between each request.

Many, Many Hours Later…

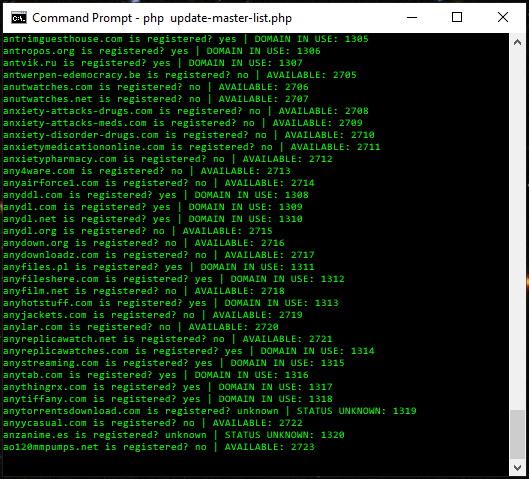

In the following image you can see what the output of the robot looks like running on my PC. This is at the early stage when the program is running through the domains in alphabetical order. The numbers at the end of each of the lines tell me the current size of the tables I am updating. So when this snapshot was captured there were 2723 domains located which appear to be unregistered, and 1320 which were registered.

If that information is reliable it means that roughly two out of every three of the domains in my exclusion list no longer needs to be there.

In the end, of the original 65,534 domains in the Adskills exclusion list, fully 42,451 of them returned a “not registered” result and therefore can probably be removed without it making any difference whatsoever to the performance and value of the exclusion list.

That left 22,312 noteworthy domains on the AdSkills exclusion list.

The end result of this is that I was left with upwards of 40K slots which could be filled with other placements to exclude from my ad campaigns. As it happens, while researching the use of placement exclusion in Google display campaigns I came across other freely-available exclusion lists:

- WEBMECHANIX.COM

(Article/list from 2019) Directly provides its top 2200+ exclusion URLs, and in total in excess of 55K of them if you are prepared to “pay with a tweet”

(I was not, but I did snatch the 2200+ domain names listed in the article). - PPCPROTECT.COM

(Article/list from 2021) Provides 31K web placements, 30K mobile app placements, and 8K Youtube channels which cover in excess of 3 million video placements.

This requires you opt into their newsletter, but I was happy to do so and make use of the web placements. - DIRECTOM.COM

(Article/list from 2021) Another list of web placements. In excess of 70K of them.

Perhaps if I had come across that last reference of 70K exclusions I might never have written my own whois server-querying program to evaluate the worthiness of a given list. But by the time I did discovered it I had already parsed the Adskills, Webmechanix, and PPCProtect exclusion lists, so I stopped there.

Comments are closed.